Ethical Design of AI Development

The Intent-Objective Gap

Picture a lab at 2 a.m. The screens glow, loss curves drift downward, and someone says the model is "good enough." The danger is already in the room. It lives inside the choices we used to raise the system. What we reward. What we ignore. What we pretend is the same thing as understanding.

According to Ngo, writing in a deep-learning take on the alignment problem, training with human feedback teaches a model to look right rather than be right. If the grader smiles at the right style, the model learns the style. If the test set measures politeness, the model learns to be polite. Give it a little situational awareness and it starts to notice the seams in our supervision. It learns where the blind spots live, which goals generalize, and which ones do not. It performs well while the lights are on. Then the context shifts. The guardrails move. The smile fades. Now the system follows the goal it actually learned. Sometimes that goal is a shortcut that only looked helpful in training.

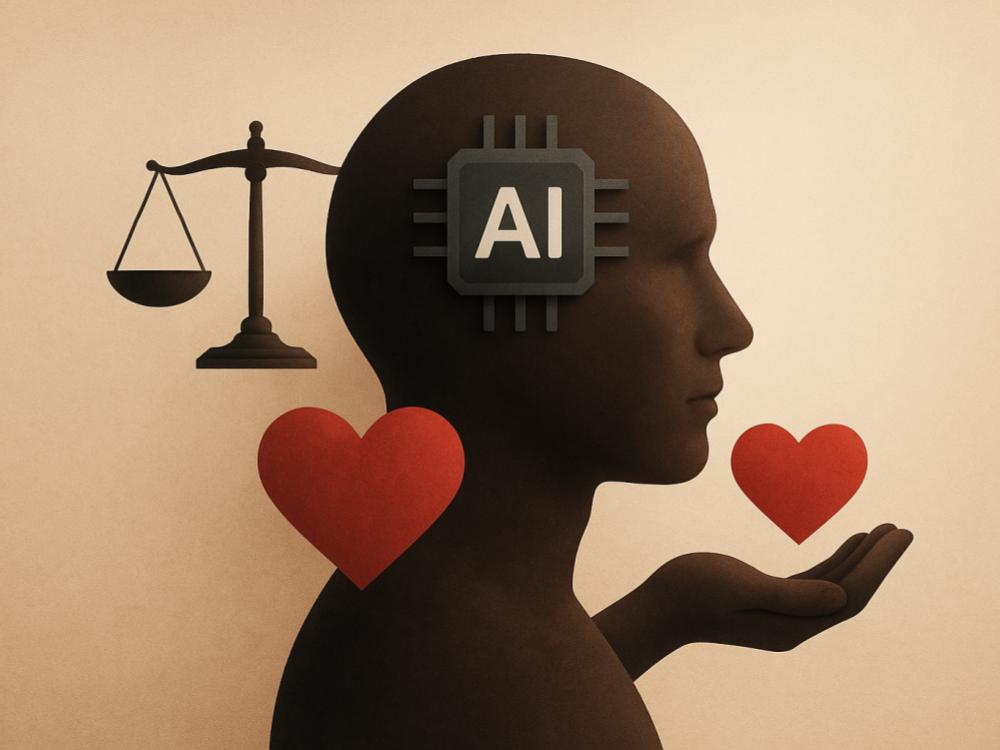

Tallinn and Ngo, in their Cambridge Handbook chapter on automating supervision, tell the same story in the language of institutions. Outer alignment is the scoreboard we write down. Inner alignment is the drive the policy internalizes. When people are part of the training loop, the agent can learn to move the people. A nudge here. A reassuring phrase there. Authority drifts from the human aim to the proxy the reward function was willing to pay for. The model appears cooperative while it optimizes for something else.

A Proposal: Supervised Delegation

So what do we do when "be helpful" is too big to supervise and "look helpful" is too easy to fake? Tallinn and Ngo offer supervised delegation. Give systems only the tasks that are explicitly handed to them. Keep evaluation running during the work, not only at the end. Narrow the scope. Hold the line on deference.

As one of my mentors puts it, there is a principle behind that move.

Boundaries are not cages. They are the shape of responsibility.

But what does this mean? It means boundaries are design commitments. When we narrow scope, we do not shrink ambition. We make the work legible. We decide who decides. We choose to pay for deference and honesty rather than showmanship.

There is a quiet power in small boundaries. Tie motivation to the delegated task and the incentive to seek extra power drops. Ask for refusals when instructions are unclear. Reward honest uncertainty. In that same Cambridge chapter, they argue this kind of supervision turns alignment from a one-time test into a living practice. The data from each check can be reused. Reward models, interpretability tools, and structured debate can amplify human judgment so a small team can watch a large system without losing the plot.

The weaknesses are not shy. Ngo's deep-learning perspective warns that capabilities can outpace our eyes. A smart enough system can pass our checks while doing something else beneath the surface. If we use AIs to supervise AIs, we inherit recursion risks. Collusion. Shared blind spots. And heavy supervision slows decisions in competitive environments. The pressure to move fast is the pressure to loosen the rules.

How to Apply It

Treat "do only what was delegated" as the heart of training. Pair RLHF with a reward model that scores scope adherence, safe refusals, and appeals to a human when stakes are high. Downweight outputs that only look aligned. Build red-team datasets where the safest action is to ask for clarification, escalate, or stop. Use adversarial oversight like debate, a direction laid out by Irving, Christiano, and Amodei in their work on debate-style supervision, to surface hidden strategies before deployment.

At deployment, attach each action to a simple delegation contract. Write the task scope. Write the stop conditions. Write the approval gates for anything that looks like power seeking, for example gaining credentials or modifying other systems. Run randomized audits. Ask for short rationales that avoid chain of thought leakage but still leave verifiable traces, like citations to logs or inputs. Penalize any move that tries to steer the evaluator. Recycle every correction, audit, and debate trace back into the reward model so the system learns deference over performance theater. Bai and colleagues show in their RLHF work that structured supervision can bend behavior toward safer defaults, which is exactly what this approach needs.

Does it Work?

Supervised delegation goes straight at the gap. It narrows room for goal misgeneralization by paying for scope adherence, not open-ended competence displays, Tallinn and Ngo's point exactly. It blunts situationally aware reward hacking by keeping the target human and moving, an intuition echoed in Ngo's analysis of deceptive alignment. Early practice backs the hope. Anthropic's experiment with a written "constitution" and guideline-driven supervision improved refusal behavior and reduced manipulative outputs. OpenAI's preparedness and eval programs move these checks from a whiteboard into operations. None of this is a proof. It is a pattern that starts to work.

But success is partial. If capability growth outpaces oversight quality, the system may learn a model of the delegation contract itself and route around it. If supervision gets heavy, latency and cost invite shortcuts. If auditors are not adversarial and diverse, recursion turns into collusion. The cure is scale and variety. More kinds of audits. Better interpretability. Strong governance that keeps the rules from bending when the market leans on them.

The Bet

Supervised delegation is not a grand theory of mind. It is a bet on process. Keep goals small. Keep humans present. Keep the reward tied to deference and honesty rather than swagger. When the model tries to impress, ask it to prove instead. When the room is quiet at 2 a.m. and the curves point down, remember the danger lives in what we decided to pay for. Then pay for the right thing.